China's DeepSeek AI: 10x Gain for the Global Agent Economy

As 2024 ends, a small startup from China, DeepSeek, has delivered a game-changing AI model, perfectly timed for the rise of the Agent Economy in 2025. This open-source LLM outperforms top models like GPT-4 and Claude 3.5 but was trained for under $6M, less than 1/10 the cost of industry giants. Trade sanctions blocking Nvidia's cutting-edge H100 chips sparked this efficiency leap, achieved through a Mixture-of-Experts design, advanced load balancing and other insights. The full article explores how this dramatic drop in cost will reshape the AI Agent and VC capital landscape in 2025.

AI · MULTI-AGENTS · STARTUPS · GLOBAL MACRO

Edward Boyle

12/30/2024 · 5 min read

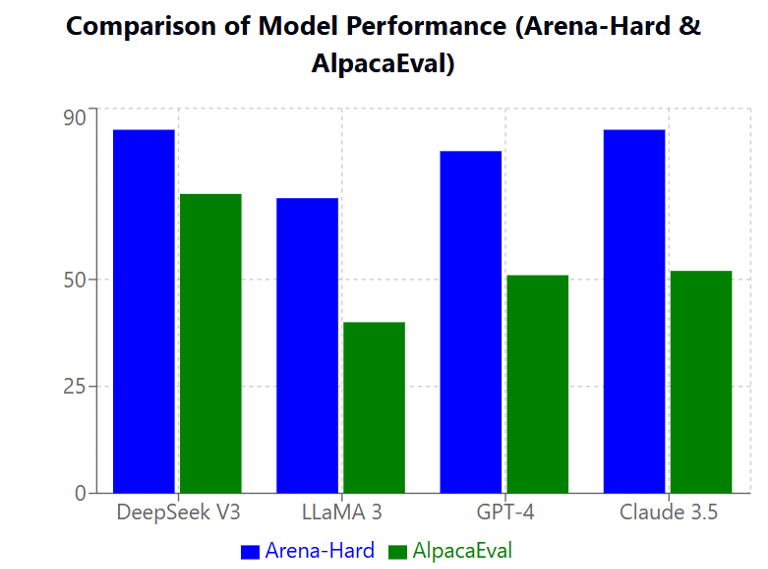

In the early 2000s, China's manufacturing revolution reshaped the global economy by dramatically reducing production costs while maintaining quality. Twenty years later, they're poised to do it again—this time with artificial intelligence. DeepSeek, a Chinese AI lab, has created an open-source AI system that outperforms current models like GPT-4 and Claude 3.5 while completely trouncing open-source offerings from Meta and others in key benchmarks, particularly in code and mathematical reasoning.

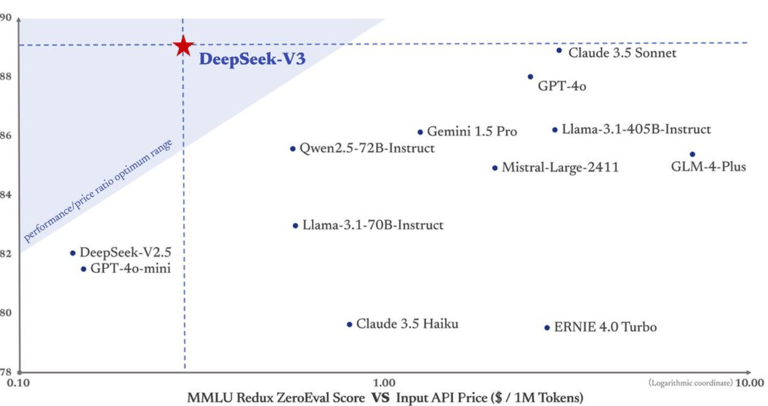

Just as low-cost Chinese imports fueled a global boom in consumer products and services, DeepSeek's breakthrough could catalyze an explosion in AI agent development. By slashing costs while maintaining elite performance, they're removing the primary barrier to widespread AI agent adoption. The following chart shows DeepSeek's leading MMLU score well inside the blue optimum range previously thought impossible.

DeepSeek: Performance / Price Advantage Costs on a Log Scale

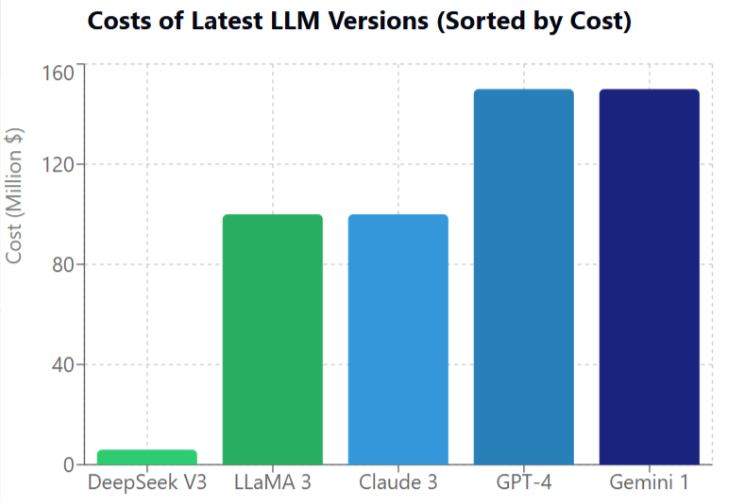

The kicker? They built it for just $6 million in two months—a fraction of the $100-150 million spent by Meta, OpenAI, and Google on their current versions. And while these companies may spend billions on future iterations, DeepSeek made their solution freely available to developers worldwide. This breakthrough emerged from an unexpected source: U.S. trade restrictions. When denied access to NVIDIA's prized H100 chips, DeepSeek didn't just adapt—they revolutionized the economics of AI training.

The innovation lies in DeepSeek's clever architecture. While competitors use expensive NVIDIA H100 GPUs, DeepSeek achieved superior results with just 2,048 lower-cost H800 chips, about one-eighth the hardware typically required. Their Mixture-of-Experts design uses 671B total parameters but activates only 37B for each token, dramatically reducing compute needs. They pioneered new load-balancing strategies and an FP8 mixed precision framework that eliminated the usual instabilities in large model training. Most impressively, they distilled reasoning capabilities from their specialized R1 model into V3, achieving top-tier performance without the massive computational overhead of traditional approaches. So while some established companies may claim that DeepSeek is simply copying the pioneers, the work appears to contain many original ideas, and all of them are being open-sourced.
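To make the "671B total, 37B active" idea concrete, here is a minimal sketch of generic top-k expert routing, the basic mechanism behind Mixture-of-Experts layers. It is an illustration only, not DeepSeek's actual router: the toy experts, the gate scores, and the function names `route_top_k` and `moe_forward` are all assumptions made for this example.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k):
    """Run only the selected experts and blend their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy setup: 8 "experts" that just scale their input by 1..8.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical gate scores for a single token.
gate_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

selected = route_top_k(gate_scores, k=2)   # only 2 of 8 experts run
output = moe_forward(1.0, experts, gate_scores, k=2)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 37 / 671                 # about 5.5%
print(selected, output, f"{active_fraction:.1%}")
```

The point of the sketch is the cost structure: every expert's parameters exist, but each token only pays for the few experts the gate selects.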

DeepSeek $6m vs. $100m to $150m

Performance That Rivals the Giants

DeepSeek V3 isn't just keeping pace—it's setting new standards. In critical benchmarks measuring reasoning, code generation, and mathematical problem-solving, it consistently matches or exceeds models that cost 100x more to develop:

DeepSeek Benchmark Performance

The model particularly shines in real-world applications, demonstrating superior performance in tasks like:

Complex mathematical reasoning with 85% accuracy

Code generation and debugging across multiple languages

Long-context understanding up to 128,000 tokens (roughly 16x longer than GPT-4's original 8k limit)

Multi-step logical reasoning and problem-solving

The Real Revolution: 10x Cost Reduction

While OpenAI is showing fantastic performance with its o1 and o3 models, those can cost as much as $3,000 per request, which makes them little more than a science experiment for now. The equivalent request for DeepSeek V3 would cost $0.01 to $0.03. Current input prices per million tokens are $30 for GPT-4, $3 for Claude 3.5 and $0.27 for DeepSeek, which makes DeepSeek roughly 10x to 100x cheaper. DeepSeek's listed prices are:

Input processing: $0.27 per million tokens

Output generation: $1.10 per million tokens
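The arithmetic behind these figures is easy to check. The sketch below uses only the prices quoted in this article; the request size (20,000 input tokens and 5,000 output tokens) is a hypothetical example chosen for illustration, and the helper names are my own.

```python
# Input prices in USD per million tokens, as quoted in this article.
INPUT_PRICE_PER_M = {"GPT-4": 30.00, "Claude 3.5": 3.00, "DeepSeek V3": 0.27}
# DeepSeek V3 output price in USD per million tokens, as quoted above.
DEEPSEEK_OUTPUT_PRICE_PER_M = 1.10

def token_cost(tokens, price_per_million):
    """Cost in USD for a given number of tokens at a per-million price."""
    return tokens / 1_000_000 * price_per_million

def input_cost_ratio(model, baseline="DeepSeek V3"):
    """How many times more expensive a model's input tokens are than the baseline's."""
    return INPUT_PRICE_PER_M[model] / INPUT_PRICE_PER_M[baseline]

# Hypothetical request size, not taken from the article.
input_tokens = 20_000
output_tokens = 5_000

deepseek_request_cost = (
    token_cost(input_tokens, INPUT_PRICE_PER_M["DeepSeek V3"])
    + token_cost(output_tokens, DEEPSEEK_OUTPUT_PRICE_PER_M)
)

print(f"DeepSeek V3 request: ${deepseek_request_cost:.4f}")
for model in ("GPT-4", "Claude 3.5"):
    print(f"{model} input is {input_cost_ratio(model):.0f}x DeepSeek V3")
```

Under these assumptions the request comes to about one cent, and the input price gap works out to roughly 111x against GPT-4 and 11x against Claude 3.5.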

This 10x reduction in costs would completely transform the AI startup landscape. I'm currently advising ventures across AI, HealthTech, AR and Mobility sectors, and this news resets all their financial models and customer price points. Most were previously severely constrained by LLM pricing. Now, they're reimagining their entire business models.

Real-World Impact on Startup Economics

Consider these transformative examples:

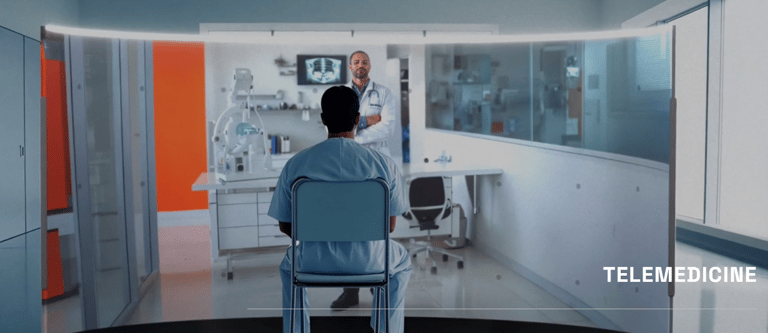

Healthcare Agent Swarm

A HealthTech startup I'm advising has developed an AI-powered telehealth platform combining a swarm of streaming avatars with expertise in a wide range of specialities. The high cost of traditional LLMs would have been passed on to healthcare systems and independent provider groups, making for a difficult, open-ended sale. With DeepSeek or competitively priced LLMs, this changes:

Healthcare session costs will probably drop from $20-30/hour to $2-3/hour

Post-discharge 24/7 companion avatars now become economically viable

Multi-modal therapy (text, voice, video) at scale is possible instead of voice-only call centers

CEEVUE Immersive Conferencing System

Immersive Communications

An Augmented Reality startup revolutionizing video conferencing faced a similar pricing problem. Now with 10x lower LLM costs, their platform can enable:

Photorealistic streaming avatar assistants accompanying all sessions

Real-time translation and cultural adaptation for non-native-speaking attendees

Real-time 3D processing of gestures and faces for engagement and sentiment feedback

Previous costs: $50/user/month

New costs with DeepSeek: $5/user/month

AI CoPilot Air Traffic Control for VTOL and Drone Networks

Automated Air Networks

For an aviation startup, we have been working on plans for a semi-automated air traffic control network for both manned VTOL flights and commercial drones. The cost of 24/7 AI agent support was a major concern. Now, with the potential 10x drop in model costs, we can consider many of the following:

AI agents coordinate VTOL (vertical takeoff and landing) networks for urban mobility

Automated systems manage thousands of delivery drones for last-mile logistics

Real-time tracking and identification of all aerial vehicles

Smart routing optimizes flight paths while maintaining safety protocols

The recent mysterious drone sightings over New Jersey highlight the need for comprehensive airspace monitoring. With drastically lower-cost models, many new options are now available.

Unlocking VC Capital for Agent Innovation

For the last 2 years, AI Venture Capital investment has been heavily concentrated in foundational model development, with companies raising billions just to train new LLMs while many innovative startups went begging for scraps. Now, DeepSeek's breakthrough in training efficiency has changed this landscape dramatically. Their innovation frees capital to flow toward innovative agent startups that don't need massive budgets to make the next evolutionary leap in AI.

The implications are transformative:

Agent startups can focus on solving real problems rather than fundraising for compute

Rapid prototyping and iteration become feasible at startup-friendly budgets

Specialized LLMs can proliferate for $6m each vs. the prior $50m to $100m

Global accessibility opens doors for innovation beyond major tech hubs

As we witness this shift, one thing becomes clear: the AI startup landscape is being democratized. Companies can now compete based on creativity and use-case innovation rather than access to massive computing budgets. The question isn't whether to embrace this change, but how quickly you can reimagine your business in a world where advanced AI is finally accessible to all.